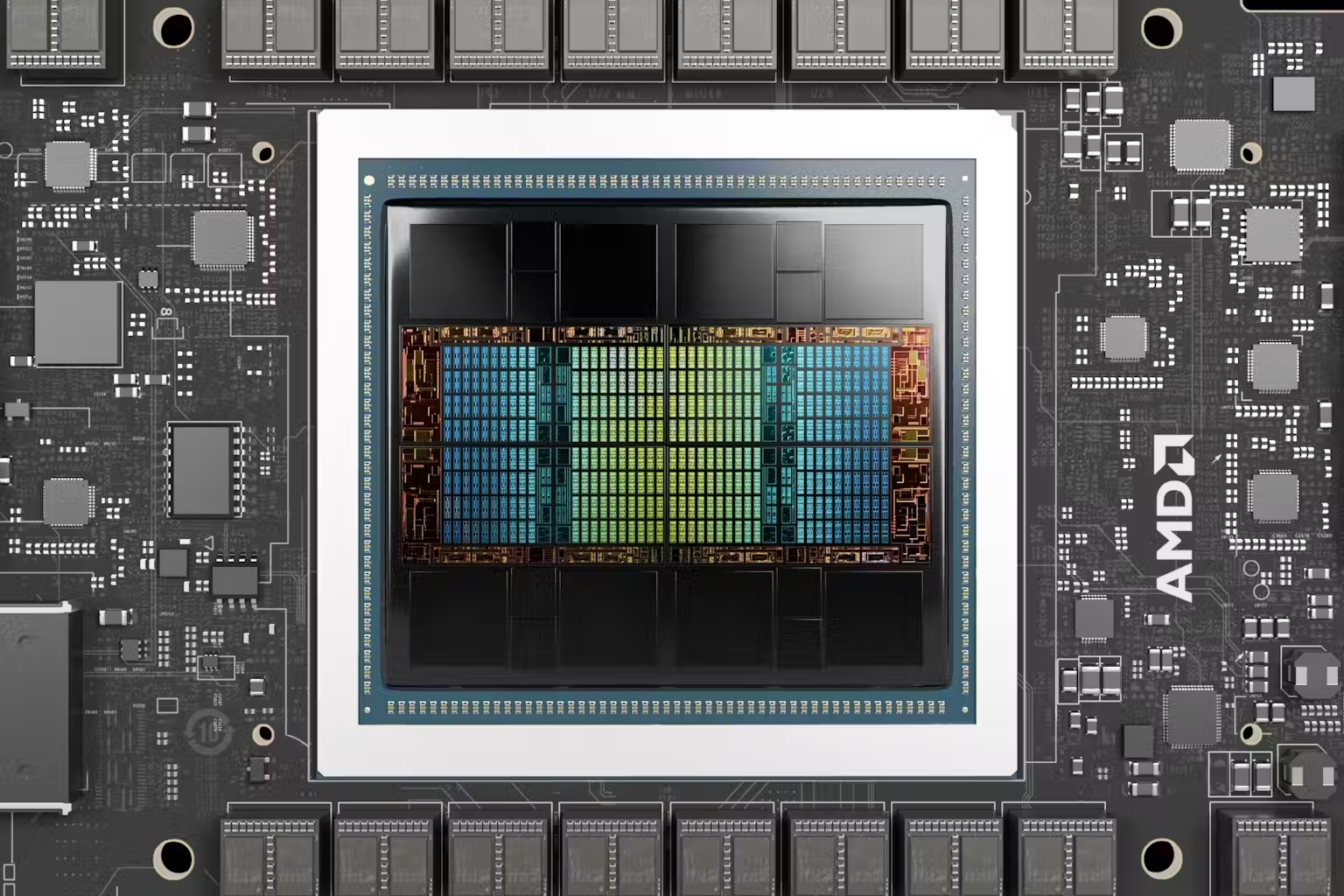

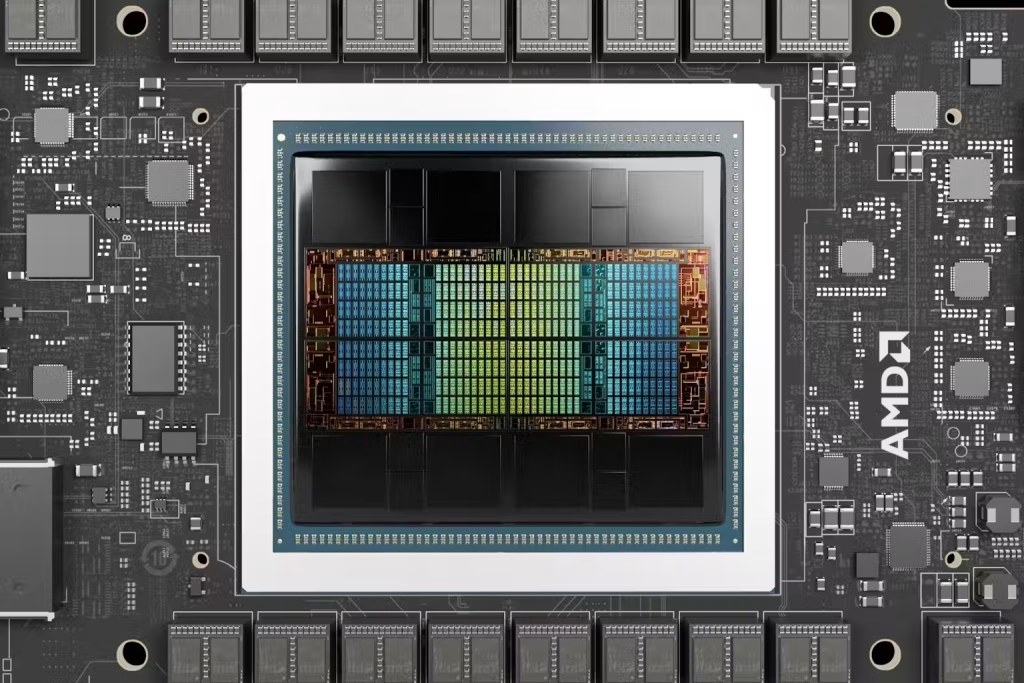

In the rush to deploy generative-AI tools, scale data-centres, and raise billions for AI start-ups, a critical assumption remains largely untested: that the major hardware inputs underpinning this boom, notably high-end GPUs and other specialised accelerators, will retain their cost structure, depreciation lifecycle and economic efficacy at scale. Bloomberg notes that while discussion often centres on algorithmic breakthroughs or societal impact, the “mundane” matter of how long AI chips will last, and what scale truly costs, has received scant attention.

In other words: if the industry’s assumptions about its infrastructure cost base, depreciation cycle or amortisation are wrong, the macroeconomics of AI may look dramatically different.

Key Themes & Drivers

1. Cost base & depreciation assumptions

At the heart of the article is a warning that many AI business models assume long lifecycles for expensive hardware (GPUs, accelerators) and assume that its cost can be fully amortised across massive workloads. But if hardware becomes obsolete faster, or utilisation falls short, the same purchase price is spread over fewer productive hours, so the cost per “use-unit” rises and margin potential shrinks (Bloomberg).
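The arithmetic behind that warning can be sketched in a few lines. This is a minimal illustration, assuming straight-line depreciation and treating an hour of utilised accelerator time as the “use-unit”; the function name `cost_per_used_hour` and every figure (purchase price, useful lives, utilisation rates) are hypothetical and do not come from the Bloomberg article.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def cost_per_used_hour(purchase_price, useful_life_years, utilisation,
                       residual_value=0.0):
    """Straight-line depreciation charge per hour the hardware is actually used.

    utilisation is the fraction of calendar hours doing paid work (0 < u <= 1).
    """
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    annual_depreciation = (purchase_price - residual_value) / useful_life_years
    used_hours_per_year = HOURS_PER_YEAR * utilisation
    return annual_depreciation / used_hours_per_year


# Hypothetical accelerator costing 30,000 (any currency).
base = cost_per_used_hour(30_000, useful_life_years=6, utilisation=0.8)
stressed = cost_per_used_hour(30_000, useful_life_years=3, utilisation=0.5)

print(f"base case:     {base:.2f} per used hour")
print(f"stressed case: {stressed:.2f} per used hour")
print(f"cost multiple: {stressed / base:.1f}x")
```

Under these assumed inputs, halving the useful life and cutting utilisation from 80% to 50% compound: the depreciation charge per used hour rises by a factor of 2 × (0.8 / 0.5) = 3.2, before any change in power, cooling or maintenance costs.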

2. Scale economics vs diminishing returns

Many firms are building “data-centre farms” or “AI factories” with the expectation of linear or better scale economics. But as hardware ages and the overheads of cooling, power, maintenance, floor-space and software grow, the assumption of improving returns may be overstated.

3. Cycle risk in hardware obsolescence

The AI industry is riding a rapid upgrade cycle of new chips, new architectures and more efficient models. If depreciation assumptions are too optimistic relative to that cycle, firms may face accelerated capex, higher write-offs and margin compression.

4. Valuation risk from underlying infrastructure

Investors may be valuing AI firms on growth narratives and scale—but behind that is the assumption that input costs remain manageable. If that assumption fails, valuations may re‐rate downward.

5. Hidden leverage and credit risk

Because hardware investment is upfront and large, companies may be using debt or leasing to finance GPU/accelerator rigs. If utilisation or lifecycle assumptions fail, the hardware may not earn enough to service those liabilities, and they become a source of credit risk.
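One rough way to see how utilisation feeds into credit risk is a coverage ratio: cash earned by the hardware divided by the debt service it must support. The sketch below assumes a standard level-payment (annuity) loan; the function names and all inputs (loan size, interest rate, hourly price, operating cost) are hypothetical illustrations, not figures from the article.

```python
HOURS_PER_YEAR = 24 * 365


def annual_debt_service(principal, annual_rate, term_years):
    """Level annual payment on a fully amortising loan (annuity formula)."""
    if term_years <= 0:
        raise ValueError("term_years must be positive")
    if annual_rate == 0:
        return principal / term_years
    return principal * annual_rate / (1 - (1 + annual_rate) ** -term_years)


def coverage_ratio(hourly_price, utilisation, annual_opex, debt_service):
    """Cash earned after operating costs, per unit of debt service owed."""
    revenue = hourly_price * HOURS_PER_YEAR * utilisation
    return (revenue - annual_opex) / debt_service


# Hypothetical: one 30,000 accelerator financed at 10% over 4 years.
payment = annual_debt_service(30_000, annual_rate=0.10, term_years=4)

for utilisation in (0.8, 0.6, 0.4):
    ratio = coverage_ratio(hourly_price=2.0, utilisation=utilisation,
                           annual_opex=3_000, debt_service=payment)
    print(f"utilisation {utilisation:.0%}: coverage {ratio:.2f}x")
```

A ratio below 1.0 means the asset no longer pays for its own financing. With these assumed inputs, that threshold is crossed somewhere between 80% and 60% utilisation, which is why utilisation shortfalls matter more for levered operators.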

Investment Implications & Opportunities

Opportunities

- Hardware-upgrade specialists: Firms that provide next-generation accelerators, or more efficient chips/architectures that improve cost per compute, may benefit as the industry looks to stretch hardware lifecycles.

- Maintenance/repairs/refurbishment services: As the installed base of AI hardware grows, service-providers offering refurbishment, extended-lifecycle support or second-use markets (e.g., legacy datacentre hardware) may see growth.

- Software/firmware-optimisation firms: Companies that can increase utilisation of existing hardware, reduce the need for new hardware, or compress model size without performance loss may capture a cost advantage.

- Alternative architecture players: Firms developing non-GPU acceleration (e.g., analog computing, neuromorphic chips, ASICs for LLMs) might benefit from the cost-strain if standard GPU burn-rates prove too high.

Risks

- AI infra‐heavy firms: Companies investing heavily in GPU farms without clear utilisation or amortisation paths may face margin shocks. Investors should stress-test depreciation schedules.

- Leasing/credit exposure: Firms with large hardware leases or optimistic depreciation assumptions may find themselves over-levered if hardware cycles shorten.

- Valuation downside: If the unproven assumption (that hardware amortises smoothly over many years) fails, AI company valuations may need downward adjustment — especially those priced on aggressive scale‐out assumptions.

- Competitive pressure on cost-per-unit: If hardware costs don’t decline as expected—or conversely if more efficient alternatives out-compete existing architectures—firms may face rapid margin compression.
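The stress test on depreciation schedules suggested above can be sketched as a simple sensitivity table. This is a minimal illustration under stated assumptions: straight-line depreciation, revenue and cash operating costs held constant, and entirely hypothetical figures; `stress_test_margin` is an illustrative name, not an established tool.

```python
def stress_test_margin(revenue, cash_opex, hardware_capex, useful_lives):
    """Operating margin under each assumed useful life (straight-line).

    Returns a dict mapping useful life in years to operating margin.
    """
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    margins = {}
    for life in useful_lives:
        if life <= 0:
            raise ValueError("useful lives must be positive")
        depreciation = hardware_capex / life
        margins[life] = (revenue - cash_opex - depreciation) / revenue
    return margins


# Hypothetical firm: revenue 100, cash opex 40, hardware capex 180.
margins = stress_test_margin(revenue=100.0, cash_opex=40.0,
                             hardware_capex=180.0, useful_lives=(6, 4, 3))

for life, margin in margins.items():
    print(f"{life}-year life: operating margin {margin:.0%}")
```

In this assumed case, shortening the useful life from six years to three doubles the annual depreciation charge and takes the operating margin from 30% to zero, with no change in revenue or cash costs.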

Portfolio & Tactical Considerations

- Focus on cost-structure transparency: For AI companies in your portfolio, examine disclosed hardware capex, expected depreciation schedule, utilisation assumptions and model-throughput targets.

- Size exposure to infrastructure-heavy names: If a company’s growth relies heavily on scaling hardware, it may warrant smaller sizing or hedging given the unproven assumption.

- Add exposure to service/optimisation players: Consider allocation to firms whose business model is to reduce the cost base of AI (software optimisers, hardware refurbishers, alternative accelerators).

- Monitor hardware cost trends: If GPU prices stop falling, or maintenance/energy costs rise, reassess your growth/margin expectations for the entire AI value chain.

- Maintain stop-loss or hedge for tail risk: The risk of a structural miss in depreciation or utilisation could trigger rapid re-rating; hedging or disciplined sizing may be prudent.

What to Monitor / Milestones

- Hardware capex disclosures and depreciation schedules: Are AI firms lengthening assumed useful lives (slower amortisation), or reporting accelerated write-downs?

- Utilisation metrics: Are GPU/accelerator clusters operating near design throughput or are they under-utilised?

- New architecture announcements: Are firms switching away from standard GPUs to novel architectures that change the cost base (and invalidate prior assumptions)?

- Hardware cost trends: Monitor GPU/accelerator pricing, energy/maintenance cost inflation, second-use markets for legacy hardware.

- Profitability / margin disclosures: Watch if “cost-per-inference” or “cost-per-training-unit” metrics increase rather than decrease as expected.

- Credit/lease exposure: Check for firms with large hardware leases or debt tied to hardware assets and assess their resilience if amortisation assumptions fail.

Conclusion

For professional investors, the Bloomberg article is a wake-up call: the excitement around AI growth must be grounded in the economics of infrastructure costs, hardware lifecycle and utilisation. Growth narratives are strong, but the underlying assumption that the infrastructure cost base remains manageable is unproven.

If that assumption fails, the impact could ripple across valuations, margin expectations and credit risk in the AI sector. Conversely, firms that recognise this early and optimise the cost base — whether through new architectures, better utilisation, or diversified hardware strategies — may capture outsized advantage.

In essence: the AI boom isn’t just about algorithms—it’s about cost-efficient infrastructure at scale. Investors who foreground that will be better-positioned; those who ignore it risk being caught in the next wave of disappointment.