OpenAI has reportedly struck deals with Samsung and SK Hynix to supply memory chips for Stargate, its large-scale AI infrastructure buildout (likely to serve both next-generation model training and inference). This is more than a supply deal: it signals a shift in hardware stack strategy, an appetite for vertical integration, and new supplier dynamics in the AI compute arms race.

Below is a breakdown of the implications, how I’d think about positioning, and where the risks lie.

Strategic Implications & What This Suggests

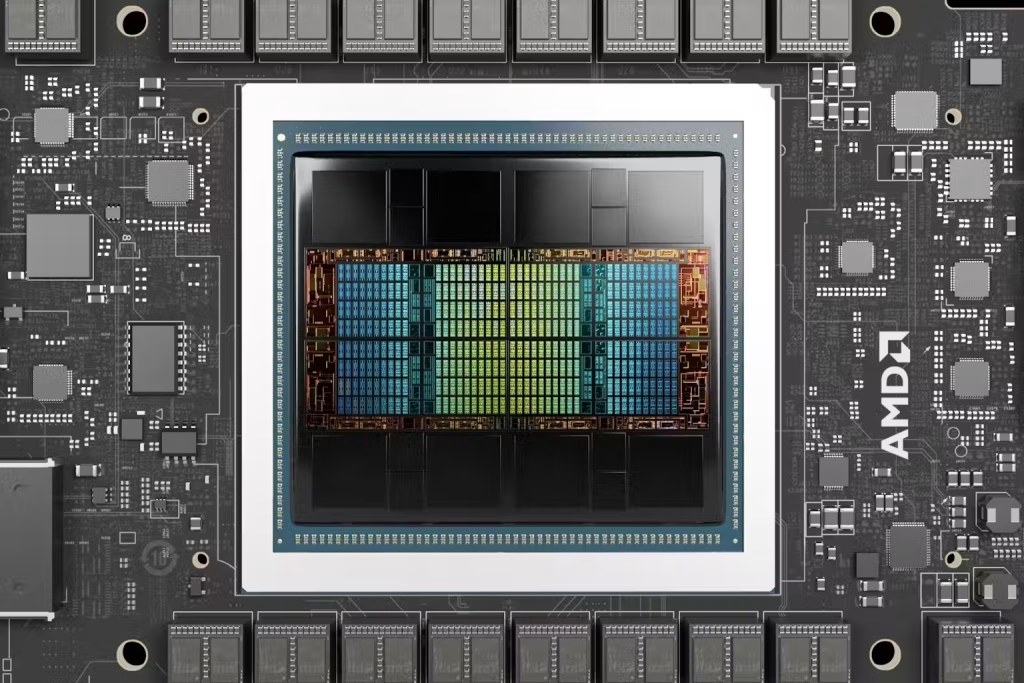

1. Memory is (again) a key bottleneck in AI scaling

The compute arms race increasingly turns on memory bandwidth, capacity, efficiency, and latency. As models grow larger and more complex, memory constraints (how fast weights and activations can be fetched, stored, and updated) become critical bottlenecks. During token-by-token inference in particular, accelerators often wait on memory rather than on arithmetic. By securing supply from leading DRAM and HBM vendors, OpenAI is building optionality and resilience into its memory stack.
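To make the bandwidth point concrete, here is a minimal roofline-style sketch. It assumes, for illustration only, that each decode step streams every model weight from memory once (ignoring KV-cache traffic and on-chip caching) and that each generated token costs roughly 2 × parameters FLOPs. The function name `decode_throughput_bound` and all hardware figures in the example are hypothetical, not OpenAI or vendor specifications.

```python
def decode_throughput_bound(n_params, bytes_per_param, mem_bw, peak_flops, batch):
    """Return (bound, memory_ceiling, compute_ceiling) in tokens/s.

    Hypothetical simplifying assumptions:
    - each decode step reads all weights from memory once, shared by the batch
      (KV-cache traffic and on-chip caching are ignored)
    - each token costs about 2 * n_params FLOPs (one multiply-add per weight)
    """
    weight_bytes = n_params * bytes_per_param
    # Memory ceiling: decode steps per second allowed by bandwidth, times tokens per step.
    memory_ceiling = (mem_bw / weight_bytes) * batch
    # Compute ceiling: peak FLOP/s divided by the FLOPs needed per token.
    compute_ceiling = peak_flops / (2 * n_params)
    return min(memory_ceiling, compute_ceiling), memory_ceiling, compute_ceiling


# Hypothetical 70B-parameter model with 16-bit weights on an accelerator with an
# assumed 3.35 TB/s of HBM bandwidth and 1 PFLOP/s of usable compute.
for batch in (1, 64, 512):
    bound, mem, comp = decode_throughput_bound(70e9, 2, 3.35e12, 1e15, batch)
    limiter = "memory" if mem < comp else "compute"
    print(f"batch={batch:4d}: ~{bound:,.0f} tokens/s ({limiter}-bound)")
```

Under these assumptions, a single stream is capped near 24 tokens/s by bandwidth while the compute ceiling sits around 7,100 tokens/s; only large batches move the limit to compute. That gap is why more and faster HBM translates fairly directly into serving capacity.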

2. Supply chain alignment & vertical integration

Traditionally, AI compute strategy has been GPU/accelerator-centric. OpenAI’s move to secure raw memory supply suggests it is willing to reach deeper into the hardware value chain, potentially enabling memory-compute co-design, better negotiated cost structures, and lower procurement risk at scale.

3. Supplier leverage & exclusivity risk

By partnering directly, Samsung and SK Hynix may offer favorable terms (discounts, prioritized allocation, co-development), but other memory buyers (GPU vendors, cloud providers, ASIC firms) may feel a squeeze or face a competitive disadvantage. OpenAI might also lock in exclusive or semi-exclusive memory configurations (e.g. custom HBM stacks or specialized memory architectures).

4. Memory technology race accelerates

We may see more demand for, and funding of, novel memory approaches: taller 3D-stacked HBM, processing-in-memory (compute logic placed alongside DRAM), next-gen DDR, resistive RAM (ReRAM), or memory specialized for weights and activations. OpenAI’s deals give memory vendors further incentive to innovate.

5. Signaling scale commitment for Stargate

Stargate is OpenAI’s large-scale AI data-center initiative. By securing key components now, OpenAI signals serious capital deployment, a long-term infrastructure footprint, and an ambition to outpace incumbents in memory and capacity planning.

Investment Plays & Portfolio Positioning

Here’s how I’d lean or reposition exposure around this development:

A. Direct / high-conviction plays

- Samsung / SK Hynix (DRAM / memory vendors)

Memory makers become direct beneficiaries of long-term procurement deals, scale, co-development opportunities, and more stable demand.

- Memory stack software / controller IP firms

As memory becomes more specialized, firms that design memory controllers, error correction, compression logic, scheduling, or optimal memory access patterns will matter more.

- Heterogeneous compute / accelerator firms

Accelerator or chip vendors with closer coupling to the memory stack (e.g. those designing accelerators that match OpenAI’s memory specs) could benefit from being part of that ecosystem.

B. Adjacent / leveraged plays

- Foundries & packaging / 3D stacking / interposer specialists

Memory + compute integration increasingly relies on advanced packaging (TSVs, interposers, chiplets). Firms that enable memory stacking, interposers, or interconnects stand to gain.

- Memory-adjacent tech: error correction, data compression, DRAM features

To manage memory bandwidth and usage, companies providing compression, sparse memory access, caching, error correction, or novel memory optimization tools may see demand.
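As a minimal illustration of how compression trades precision for memory footprint, here is symmetric per-tensor int8 quantization in plain Python. This is a generic textbook technique chosen for illustration, not a method attributed to OpenAI or any vendor; the function names and sample weights are hypothetical.

```python
import array


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest quantization step and clamp into the int8 range.
    q = array.array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from int8 codes."""
    return [scale * v for v in q]


weights = [0.12, -0.5, 0.33, 0.9, -0.07]  # hypothetical sample weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

fp16_bytes = 2 * len(weights)     # nominal 16-bit storage, 2 bytes per weight
int8_bytes = q.itemsize * len(q)  # 1 byte per int8 code
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(f"{fp16_bytes} B -> {int8_bytes} B, max abs error {max_err:.4f} (bound: scale/2 = {scale / 2:.4f})")
```

Halving bytes per weight halves the traffic each decode step must pull from memory, at the cost of a bounded rounding error (at most half a quantization step per weight).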

C. Defensive / hedging moves

- GPU / accelerator firms without tight memory alignment

If memory supply becomes constrained or favors OpenAI-aligned vendors, some accelerator players may face price premiums or delivery delays; hedged or cautious exposure is prudent.

- Memory startups with risky tech bets

Novel memory tech startups (e.g. ReRAM, MRAM) may be overhyped if incumbents respond faster; limit exposure or keep position sizes small until adoption is proven.

Risks, Pullbacks & Failure Modes

- Supply disruption / procurement trust risk

Even with agreements in place, memory supply depends on fab yields, capacity constraints, geopolitical risk, and trade sanctions. If supply falls short, performance or costs would suffer.

- Lock-in and integration overhead

Deep coupling to specific memory vendor stacks may make OpenAI less flexible and force future architectures to compromise around legacy constraints.

- Cost inflation / margin squeeze

Memory is expensive. If memory prices rise (e.g. during a DRAM upcycle), OpenAI’s cost base may increase materially, stressing unit economics.

- Competitive memory deals by rivals

If NVIDIA, AMD, Google, or other AI compute players strike their own preferential memory alliances, OpenAI’s advantage may be neutralized or contested.

- Technological obsolescence

If newer memory technologies or compute paradigms emerge (e.g. in-memory compute, chiplet designs, optical interconnects), large memory commitments may become burdensome.
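The cost-inflation risk reduces to simple arithmetic: a memory price move passes through to total system cost in proportion to memory’s share of that cost. The sketch below assumes a fixed bill of materials with no substitution and no contractual price protection; the function name, the 40% memory share, and the price moves are hypothetical inputs, not disclosed figures.

```python
def system_cost_change(memory_share, memory_price_change):
    """Fractional change in total system cost caused by a memory price move.

    memory_share: fraction of total cost attributable to memory (0..1), hypothetical.
    memory_price_change: fractional memory price change, e.g. 0.20 for +20%.
    Assumes the non-memory portion of cost stays unchanged.
    """
    if not 0.0 <= memory_share <= 1.0:
        raise ValueError("memory_share must be between 0 and 1")
    return memory_share * memory_price_change


# Hypothetical: memory is 40% of system cost.
for move in (-0.10, 0.20, 0.50):
    delta = system_cost_change(0.40, move)
    print(f"memory price {move:+.0%} -> system cost {delta:+.1%}")
```

With these hypothetical inputs, a 20% memory price increase lifts total system cost by 8%, which is why price terms in long-term supply contracts matter as much as guaranteed volume.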

Return Scenarios & Valuation Impacts

| Scenario | Assumptions | Expected Outcome | Key Milestones |
|---|---|---|---|
| Base | Memory supply deals hold; OpenAI uses memory efficiently; cost and performance advantage maintained | Moderate margin uplift for memory vendors; upward valuation rerate; incremental ecosystem advantage | Memory usage metrics, contract extensions, co-development announcements |
| Upside | Deep memory-compute co-design breakthroughs, preferential memory supply exclusivity, cost leadership, hardware roadmap advantage | Strong valuation uplift for memory and hardware allied firms; capture of premium memory architectures; possible platform dominance | Product launches, exclusive supply windows, performance breakthroughs |
| Downside | Supply constraints, memory cost inflation, competitor memory alliances, integration drag | Margin compression, supply bottlenecks, competitive disadvantage in compute scaling | Memory delays, public margin erosion, contract disputes |
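One way to collapse the scenario table into a single view is to probability-weight the scenarios. The probabilities and returns below are purely hypothetical placeholders for illustration, not forecasts, and the function name is hypothetical.

```python
def scenario_weighted_return(scenarios):
    """Probability-weighted expected return across mutually exclusive scenarios.

    scenarios: mapping of name -> (probability, return as a fraction).
    Probabilities must sum to 1.
    """
    total_p = sum(p for p, _ in scenarios.values())
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total_p}, expected 1.0")
    return sum(p * r for p, r in scenarios.values())


# Hypothetical inputs only: (probability, 12-month return).
scenarios = {
    "Base": (0.5, 0.10),
    "Upside": (0.2, 0.40),
    "Downside": (0.3, -0.25),
}
expected = scenario_weighted_return(scenarios)
print(f"probability-weighted return: {expected:+.1%}")
```

The useful part is less the single number than the discipline: each scenario’s key milestones become the evidence for revising its probability.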

Signals & Metrics to Track

- Announcements by Samsung and SK Hynix detailing contract size, memory types (HBM, DDR, HBM3E/HBM4), and co-design commitments.

- Equipment and memory supplier capex trends: fab construction, wafer starts, capacity expansions.

- Memory price trends (spot DRAM, HBM premium spreads) and cost inflation.

- OpenAI’s disclosed compute infrastructure, memory usage per model, performance metrics.

- Competitive memory contract announcements by NVIDIA, Google, AMD, and other AI infrastructure players.

- Patent filings or IP developments in memory + AI integration architectures.
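For the price signals, a simple tracker can compute the HBM premium (HBM price per GB divided by commodity DRAM price per GB) and its period-over-period change. The function names and quarterly figures below are hypothetical placeholders, not market data; in practice the inputs would come from whatever price source you follow.

```python
def hbm_premium_series(hbm_per_gb, dram_per_gb):
    """Ratio of HBM to commodity DRAM price per GB for each period."""
    if len(hbm_per_gb) != len(dram_per_gb):
        raise ValueError("series must have the same length")
    return [h / d for h, d in zip(hbm_per_gb, dram_per_gb)]


def pct_changes(series):
    """Period-over-period fractional change; one element shorter than the input."""
    return [(curr - prev) / prev for prev, curr in zip(series, series[1:])]


# Hypothetical quarterly prices in USD per GB (placeholders, not real data).
hbm = [10.0, 11.0, 12.5, 12.0]
dram = [2.0, 2.0, 2.5, 3.0]

premium = hbm_premium_series(hbm, dram)
for quarter, (p, chg) in enumerate(zip(premium[1:], pct_changes(premium)), start=2):
    print(f"Q{quarter}: premium {p:.2f}x ({chg:+.1%} vs prior quarter)")
```

A narrowing premium while contract volumes grow could hint that HBM supply is catching up with demand; a widening one would point to continued scarcity.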

Bottom Line

OpenAI’s move to bring Samsung and SK Hynix in as memory suppliers for Stargate is both strategic and competitive: it shores up a critical upstream dependency, gives OpenAI leverage over its hardware and compute stack, and aligns memory with its compute architecture. For investors, the real upside lies in the surrounding layers: memory makers, controller/IP firms, and advanced packaging and memory-compute integration tools. But the strategy also carries supply risk, margin pressure, and inflexibility risk if memory technology evolves faster than the commitments. It’s a high-stakes bet, and the hardware stack is the long game.