The European Union has officially enacted regulations banning artificial intelligence (AI) systems that are deemed to pose an “unacceptable risk” to fundamental rights, safety, and democratic integrity. The prohibitions, part of the EU’s AI Act, set a new global precedent for AI governance and ethical deployment.

Key Provisions of the Ban

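- Restricted AI Applications:

  - The legislation prohibits AI systems used for social scoring, predictive policing based solely on profiling, and mass surveillance practices such as untargeted scraping of facial images and most real-time remote biometric identification in public spaces by law enforcement.

  - Emotion recognition in workplaces and educational institutions is also banned, except for medical or safety reasons, because of concerns over privacy violations and bias.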

- High-Risk AI Regulations:

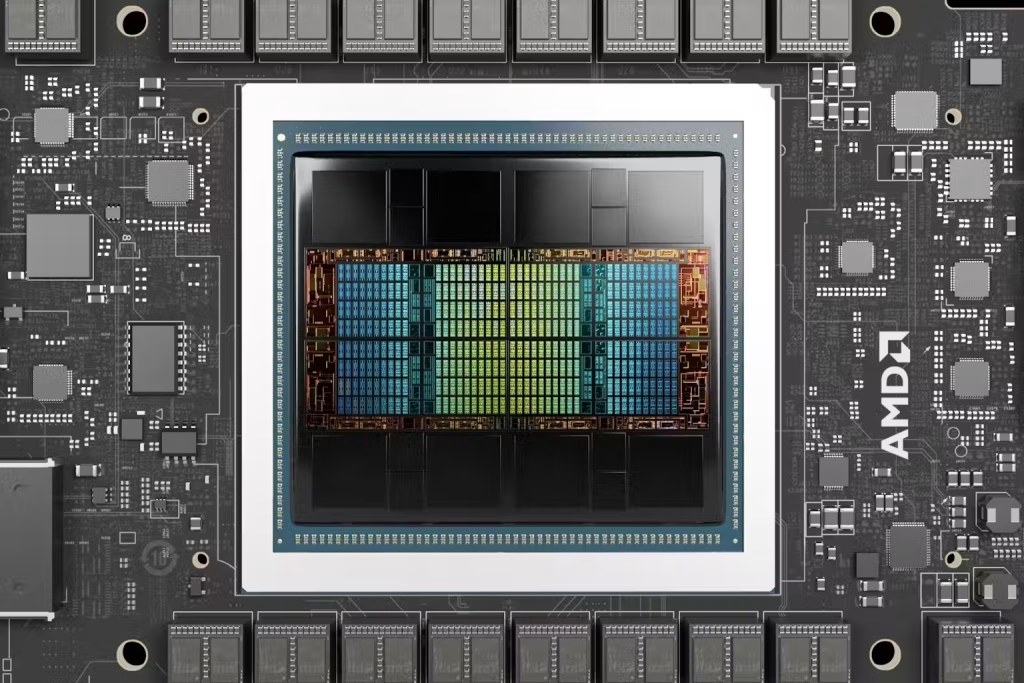

- AI tools used in law enforcement, healthcare, and finance must meet stringent compliance requirements.

- Companies deploying AI in these sectors must conduct extensive risk assessments and ensure transparency.

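- Fines for Non-Compliance:

  - Companies that use prohibited AI practices face fines of up to €35 million or 7% of global annual turnover, whichever is higher. This exceeds the maximum penalty under the EU’s General Data Protection Regulation (GDPR), which is capped at 4% of global annual turnover.

  - Companies must also demonstrate clear accountability mechanisms for AI deployment.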

Implications for the AI Industry

- Impact on Tech Giants:

  - Major AI developers such as OpenAI, Google, and Microsoft may need to modify or restrict certain AI functionalities within the EU.

  - The new rules could push these companies toward compliance-first AI strategies.

- Startup and SME Challenges:

  - Smaller AI firms may struggle with compliance costs and regulatory complexity, potentially slowing AI innovation within the region.

  - However, ethical AI startups could gain a competitive edge by aligning with EU policies from the outset.

- Global Ripple Effects:

  - Other regions, including the U.S. and parts of Asia, may consider similar regulations to ensure AI safety and ethical development.

  - The ban sets a benchmark for international discussions on AI governance and human rights protections.

Criticism and Support

- Supporters Argue:

  - The ban prevents AI misuse and protects citizens from intrusive surveillance and biased decision-making.

  - It fosters responsible AI development by holding corporations accountable for their technologies.

- Critics Claim:

  - Overregulation may stifle innovation, making the EU less competitive in AI than the U.S. and China.

  - Compliance requirements may disproportionately burden smaller firms while benefiting well-funded industry leaders.

Conclusion

The EU’s AI ban marks a significant milestone in global AI regulation. While it prioritizes ethics and human rights, it also introduces challenges for AI developers and businesses operating in Europe. As regulatory frameworks evolve, the balance between innovation and responsible AI governance will continue to shape the future of artificial intelligence.

For more information, visit TechCrunch.