Executive Summary

- Scale mismatch, five‑year clock: OpenAI is running at roughly $13B ARR today (~70% consumer) but has laid out a five‑year plan to fund $1T‑plus in compute/infrastructure commitments. That gap is forcing a pivot from a single‑product subscription engine to a multi‑line, capital‑markets‑enabled business plan. Sources: TechCrunch, Oct 14, 2025; Financial Times, Oct 15, 2025; Reuters, Oct 15, 2025.

- How they’ll try to bridge it: New lines include government & enterprise services, shopping/ads, video (Sora), agents, potential consumer hardware, and even selling compute from the Stargate data‑center program—alongside debt partnerships and further fundraises. Source: Financial Times, Oct 15, 2025.

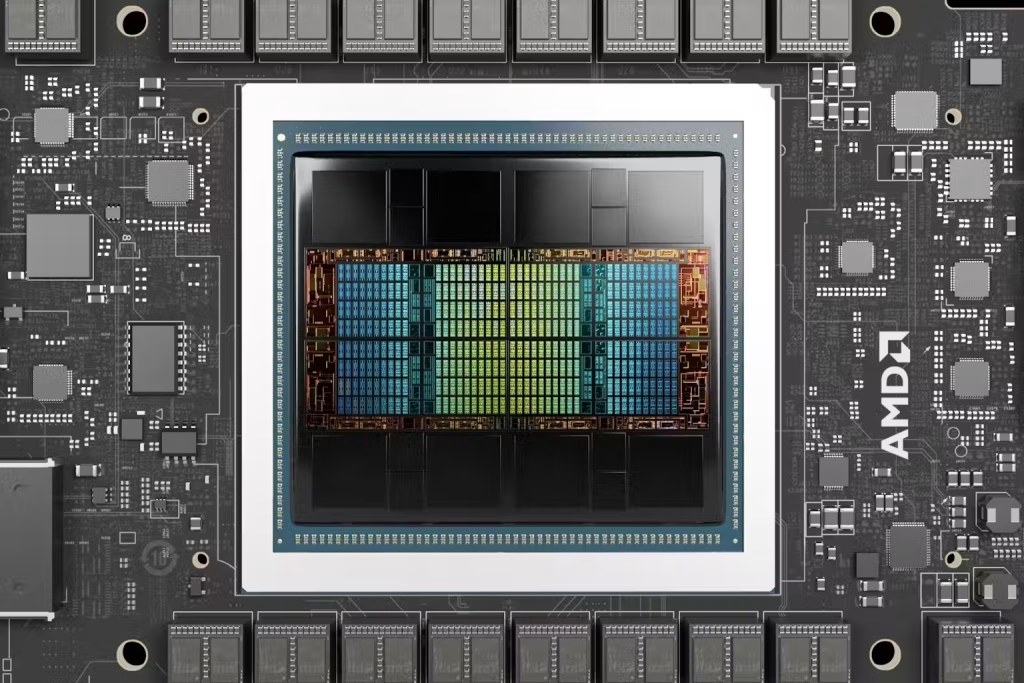

- Capex reality: OpenAI has locked in ~26 GW of accelerator capacity across Oracle/Nvidia/AMD/Broadcom and is party to a reported $300B/5‑yr Oracle cloud contract—underscoring energy‑bounded compute economics and the need to reduce $ per token via custom silicon, packaging, and networking. Sources: TechCrunch, Oct 14, 2025; Wall Street Journal, Sep 10, 2025.

- Positioning map: We favor the AI infrastructure bottlenecks (advanced packaging/foundry, optics/switching, power & liquid cooling) and select cloud capacity owners with visibility, while treating OpenAI‑dependent revenue at vendors as execution‑gated rather than annuity‑like. Context: Reuters, Oct 15, 2025; FT, Oct 15, 2025.

What happened (facts)

- TechCrunch’s read (citing FT): OpenAI’s ARR ≈ $13B, with ~70% from $20/mo ChatGPT subscriptions; ChatGPT ≈ 800M regular users with ~5% payers. Management is pursuing a five‑year plan to stand up new revenue lines and financing routes to support $1T‑plus of compute/infrastructure spending. Sources: TechCrunch, Oct 14, 2025; Financial Times, Oct 15, 2025; Reuters, Oct 15, 2025.

- Commitment scale: 2025 deal flow includes 10 GW Nvidia (LOI), 6 GW AMD, 10 GW Broadcom custom racks (inference‑tilted), and the reported $300B/5‑yr Oracle contract that starts mid‑decade, collectively implying multi‑hundred‑billion‑dollar capex and opex. Sources: TechCrunch, Oct 14, 2025; WSJ, Sep 10, 2025.

- P&L color: FT says H1‑2025 operating loss ≈ $8B; management argues falling unit compute costs plus diversified revenue can narrow cash burn as deployments shift from training‑heavy to inference‑heavy workloads. Source: Financial Times, Oct 15, 2025.
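As a sanity check on the figures above, the subscription math can be rebuilt bottom‑up and compared with the top‑down share of ARR. This is a minimal sketch: the constant names are ours, and it assumes every payer is on the $20/mo tier, which the sources do not state.

```python
# Figures quoted in the bullets above. Everything derived below is a
# back-of-envelope estimate, not a reported number.
ARR_USD = 13e9             # ~$13B annualized revenue
SUBSCRIPTION_SHARE = 0.70  # ~70% from ChatGPT subscriptions
USERS = 800e6              # ~800M regular users
PAYER_RATE = 0.05          # ~5% of users pay
PRICE_PER_MONTH = 20.0     # ASSUMPTION: every payer is on the $20/mo tier


def implied_subscription_arr(users, payer_rate, price_per_month):
    """Annualized subscription revenue implied by user count and price."""
    return users * payer_rate * price_per_month * 12


bottom_up = implied_subscription_arr(USERS, PAYER_RATE, PRICE_PER_MONTH)
top_down = ARR_USD * SUBSCRIPTION_SHARE

# Announced accelerator capacity by vendor, in GW (from the deal list above).
capacity_gw = {"Nvidia (LOI)": 10, "AMD": 6, "Broadcom": 10}
total_gw = sum(capacity_gw.values())

print(f"Bottom-up subscription ARR: ${bottom_up / 1e9:.1f}B")
print(f"Top-down (70% of $13B):     ${top_down / 1e9:.1f}B")
print(f"Announced vendor capacity:  {total_gw} GW")
```

Under these assumptions the bottom‑up estimate (about $9.6B) lands within roughly 6% of the top‑down figure (about $9.1B), so the reported user, payer, and revenue numbers are broadly consistent with each other. The three vendor figures sum to the ~26 GW cited in the summary.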

How we read it (mechanism & context)

The core dynamic is energy‑ and capital‑bounded compute. If OpenAI can compress $ per inference faster than customer willingness to pay erodes, the model scales; if not, gross margin is capped by power, silicon yield, packaging, and fabric efficiency. That’s why the stack is going multi‑vendor (Nvidia for training cadence, AMD for supply/pricing optionality, Broadcom for custom inference), and why the plan includes selling compute (Stargate) rather than only buying it. Sources: TechCrunch, Oct 14, 2025; FT, Oct 15, 2025.
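The race between cost‑down and price erosion can be sketched with a toy model in which price and unit cost each fall at a constant annual rate. All inputs below are hypothetical placeholders chosen for illustration; none is a reported or forecast figure.

```python
def gross_margin_path(price0, cost0, price_decline, cost_decline, years):
    """Gross margin per year when price and unit cost each fall at a
    constant annual rate. Returns a list of length years + 1 (year 0 first).
    """
    margins = []
    for t in range(years + 1):
        price = price0 * (1 - price_decline) ** t
        cost = cost0 * (1 - cost_decline) ** t
        margins.append(1 - cost / price)
    return margins


# HYPOTHETICAL inputs: unit cost starts at 60% of price, and price
# erodes 20% per year in both cases.
faster_cost_down = gross_margin_path(1.0, 0.6, price_decline=0.20,
                                     cost_decline=0.35, years=5)
slower_cost_down = gross_margin_path(1.0, 0.6, price_decline=0.20,
                                     cost_decline=0.10, years=5)

for year, (fast, slow) in enumerate(zip(faster_cost_down, slower_cost_down)):
    print(f"year {year}: cost falls faster {fast:6.1%} | cost falls slower {slow:6.1%}")
```

With these placeholder rates, margin widens every year when unit cost falls faster than price, and it shrinks every year (turning negative by year 5) when cost falls more slowly. The point is the direction of travel, not the levels.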

We also see systemic second‑order effects: huge AI‑campus builds are pulling in private‑equity infra and strategic consortia (e.g., the $40B Aligned Data Centers acquisition) to control power‑dense capacity. This is a real‐asset story as much as a chips story. Source: Reuters, Oct 15, 2025.

Investment implications — where we’d look (and why)

1) Advanced foundry & packaging (12–36 months)

Thesis: Leading‑edge wafers and 2.5D/3D packaging (CoWoS/SoIC equivalents) remain the binding constraint for every accelerator roadmap. As OpenAI’s partners push to cut $ per token, the profit pool tends to accrue upstream to packaging and substrates.

KPIs: Packaging lead‑times, substrate capacity adds, yield disclosures. Context: FT on cost‑down imperative, Oct 15, 2025.

2) High‑bandwidth optics & switching (6–24 months)

Thesis: Wiring tens of thousands of accelerators advantages vendors in 800G/1.6T optics, switch/NIC silicon, and low‑latency Ethernet/IB fabrics. As clusters scale, network spend mix rises; Ethernet‑forward inference designs (Broadcom lane) are a tailwind.

KPIs: Port‑to‑optics attach rates, fabric latency/throughput at cluster scale, order intake.

3) Power & thermal (6–36 months)

Thesis: The non‑chip bill (power distribution, switchgear, UPS, liquid cooling, heat rejection) is now a line item on the order of $15B per GW on some builds. Owners of modular liquid‑cooling, MV equipment, and grid interconnect services are positioned for multi‑year demand.

Catalysts: Site‑level PPAs, utility interconnect approvals, liquid‑cooling design wins. Context: FT/TechCrunch framing of GW‑scale rollouts, Oct 14–15, 2025.
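For a sense of scale, the $15B‑per‑GW figure can be multiplied through different capacity levels. This is a rough sketch only: the thesis applies that rate to "some builds", so extending it to the full ~26 GW cited in the summary is our assumption, not a sourced estimate.

```python
NON_CHIP_COST_PER_GW_USD = 15e9  # "$15B per GW" rate from the thesis above


def non_chip_bill(gw, cost_per_gw=NON_CHIP_COST_PER_GW_USD):
    """Non-chip spend (power, cooling, switchgear) for `gw` of capacity.

    ASSUMPTION: every GW is built at the same per-GW rate.
    """
    return gw * cost_per_gw


# 26 GW is the capacity figure cited in the summary. Applying one rate
# across all of it is an illustrative upper-level extrapolation.
for gw in (1, 10, 26):
    print(f"{gw:>2} GW -> ~${non_chip_bill(gw) / 1e9:.0f}B non-chip spend")
```

At that rate, 26 GW would imply roughly $390B of non‑chip spend, which is why we treat power and thermal as a bottleneck in its own right.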

4) Capacity owners with durable economics (12–60 months)

Thesis: Clouds and private AI campuses that secure cheap power and balanced customer mix (not overly single‑tenant) can monetize AI demand even if unit pricing normalizes. The Aligned deal shows the capital stack deepening.

Watch: Pre‑leased MW, renewal spreads, power cost pass‑through, asset‑level leverage. Source: Reuters, Oct 15, 2025.

5) Semis — stock‑picker’s lane (ongoing)

Thesis: We’d treat OpenAI‑exposed orders at Nvidia/AMD/Broadcom as execution‑gated (tape‑outs, packaging allocation, delivery windows). Favor names with backlog diversity beyond a single mega‑customer and with networking/packaging exposure vs. pure chip ASPs.

Catalysts: Nvidia/OpenAI 10 GW LOI checkpoints; AMD MI450 1 GW in 2H‑2026; Broadcom custom inference racks ramp 2026–2029. Context from recent partner announcements, Oct 2025.

6) Software monetization adjacencies (0–24 months)

Thesis: If OpenAI doubles payers (from ~5%) and adds ads/commerce and B2B/Gov attach, the revenue mix gets sturdier. Vendors plugging into agents, shopping, and video workflows could see API spend uplift even if headline “AI budgets” flatten. Source: Financial Times, Oct 15, 2025.
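The sensitivity to payer share can be sketched by holding users and price constant and varying only the conversion rate. The function name is ours, and the flat $20/mo price for all payers is an assumption; ads, commerce, and B2B/Gov revenue are excluded.

```python
USERS = 800e6           # ~800M regular users (as reported)
PRICE_PER_MONTH = 20.0  # ASSUMPTION: all payers on the $20/mo tier


def subscription_arr(users, payer_rate, price_per_month=PRICE_PER_MONTH):
    """Annualized subscription revenue at a given payer share."""
    return users * payer_rate * price_per_month * 12


# Step the payer share from today's ~5% up to a doubled 10%.
for rate in (0.05, 0.075, 0.10):
    arr = subscription_arr(USERS, rate)
    print(f"payer share {rate:.1%}: subscription ARR ~ ${arr / 1e9:.1f}B")

uplift = subscription_arr(USERS, 0.10) - subscription_arr(USERS, 0.05)
print(f"Uplift from doubling payers: ~${uplift / 1e9:.1f}B")
```

On these assumptions, doubling payers adds about $9.6B of annualized subscription revenue. That is meaningful against $13B of ARR but small against $1T‑plus of commitments, which is why the other revenue lines matter.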

Catalysts & timing

- Near term (Q4‑2025/Q1‑2026): More detail on financing structure, debt partnerships, and customer pipelines for government/enterprise; partner updates on hardware ramps. Sources: FT, Oct 15, 2025; TechCrunch, Oct 14, 2025.

- 2026: AMD 1 GW tranche and initial Broadcom racks roll out; validation of Oracle contract milestones and power siting progress. Sources: TechCrunch/WSJ, Sep–Oct 2025.

Scenarios (12–36 months)

- Base (~55%): “Scale the stack.” OpenAI executes on new lines; unit compute costs fall on schedule; vendor ramps land within power constraints; infra names and networking/packaging outperform broader semis.

- Bull (~25%): “Flywheel engages.” Payer share doubles, ads/commerce click, inference mix rises; time‑to‑solution improvements widen moat; capacity owners achieve tight utilization at improving marginal economics.

- Bear (~20%): “Funding friction.” Packaging or power siting delays push deployments right; macro/credit tightens; large contracts are re‑timed; OpenAI‑concentrated suppliers underperform while diversified infra wins on mix.
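The scenario weights above can be combined with a reader's own outcome assumptions to get a probability‑weighted view. In this sketch only the weights come from this note; the outcome values are hypothetical placeholders, not our return forecasts.

```python
# Scenario weights as stated in the list above.
SCENARIO_WEIGHTS = {"base": 0.55, "bull": 0.25, "bear": 0.20}


def probability_weighted(weights, outcomes):
    """Probability-weighted average of per-scenario outcomes.

    Raises ValueError if the weights do not sum to 1.
    """
    total = sum(weights.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"scenario weights sum to {total}, expected 1.0")
    return sum(weights[name] * outcomes[name] for name in weights)


# HYPOTHETICAL outcomes (relative return per scenario), for illustration only.
placeholder_outcomes = {"base": 0.10, "bull": 0.30, "bear": -0.20}

expected = probability_weighted(SCENARIO_WEIGHTS, placeholder_outcomes)
print(f"Probability-weighted outcome: {expected:.1%}")
```

The check that weights sum to 1 is cheap insurance when scenarios are revised; substitute your own outcome assumptions for the placeholders.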

Risks & what could go wrong

- Financing & counterparty risk: The $300B/5‑yr Oracle exposure and partner capex plans magnify single‑customer dependence and funding risk; any slippage could ripple across vendors. Source: WSJ, Sep 10, 2025.

- Manufacturing/packaging bottlenecks: CoWoS/3D packaging scarcity and yield variability remain gating factors; delays cascade into missed compute milestones.

- Policy & siting: Power interconnects, cooling permits, and geopolitics (export controls) can defer ramps or raise TCO.

- Competitive response: Incumbent clouds or model labs could price‑discipline the market or out‑execute on agents/ads/enterprise, compressing OpenAI’s monetization window.

- Execution risk at scale: Multi‑vendor hardware programs add integration and software fragility; misses can inflate $ per token.

What we’re watching (KPIs)

- Paying‑user mix (payers as % of active users) and ARPU trends.

- Compute unit costs (tokens per $ / $ per 1M tokens) and inference vs. training mix.

- Packaging lead‑times and substrate supply; optics attach and fabric utilization at cluster scale.

- Contract milestones: evidence that the $300B Oracle contract ramps on schedule and that the AMD/Nvidia/Broadcom programs hit their dates.

- Power: energized MW/GW vs. announced GW; liquid‑cooling adoption rates. Sources: as cited above.
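Several of these KPIs are simple ratios, and a few helper functions keep the units straight. This is a minimal sketch with function names of our own choosing; the token and energized‑capacity inputs in the usage lines are hypothetical placeholders, not reported data.

```python
def dollars_per_million_tokens(tokens_per_dollar):
    """Convert throughput per dollar into the more common $ per 1M tokens."""
    return 1_000_000 / tokens_per_dollar


def payer_share(paying_users, active_users):
    """Payers as a fraction of active users."""
    return paying_users / active_users


def energized_ratio(energized_gw, announced_gw):
    """Fraction of announced capacity that is actually powered on."""
    return energized_gw / announced_gw


# ~40M payers of ~800M users follows from the ~5% figure reported above.
print(f"Payer share: {payer_share(40e6, 800e6):.1%}")

# HYPOTHETICAL inputs below, for illustration only.
print(f"$ per 1M tokens: {dollars_per_million_tokens(500_000):.2f}")
print(f"Energized vs. announced: {energized_ratio(1.0, 26.0):.1%}")
```

Tracking tokens per $ and $ per 1M tokens as reciprocals of one another avoids mixing the two conventions when comparing vendor disclosures.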

Sources

- TechCrunch — “OpenAI has five years to turn $13 billion into $1 trillion” (Oct 14, 2025).

- Financial Times — “OpenAI makes five‑year business plan to meet $1tn spending pledges” (Oct 15, 2025).

- Reuters — “OpenAI makes five‑year plan to meet $1 trillion spending pledges, FT reports” (Oct 15, 2025).

- Wall Street Journal — “Oracle, OpenAI sign $300 billion computing deal” (Sep 10, 2025).

- Reuters — “BlackRock, Nvidia‑backed group strikes $40B Aligned Data Centers deal” (Oct 15, 2025).

Bottom line (how we’d act)

We own the bottlenecks—packaging/foundry, optics/switching, and power/cooling—and treat OpenAI‑linked demand as probable but not prepaid. Capacity owners with cheap electrons and balanced tenant mix look attractive; in semis, we bias toward networking/packaging exposure and diversified backlogs while gating any OpenAI‑heavy theses to execution milestones and evidence of payer‑mix expansion.