What’s New

- OpenAI and Nvidia plan to announce billions of dollars in investment toward UK data center infrastructure.

- The announcement is expected next week, coinciding with U.S. President Donald Trump’s visit to the UK to meet with Prime Minister Keir Starmer.

- The two companies are partnering with Nscale Global Holdings, a UK-based firm that has already committed to significant AI infrastructure development in the country.

- The deal reflects growing demand for AI compute capacity and cloud infrastructure, including sovereign AI infrastructure (that is, infrastructure that gives a country local control over AI tools, data, and hardware).

Strategic Context

- This move fits a broader global trend: tech giants and governments are pushing to build local, high-capacity AI infrastructure, partly for performance, partly for security, and partly to reduce dependence on foreign cloud and data providers.

- The UK government has expressed interest in boosting AI infrastructure, including through incentives such as AI Growth Zones and relaxed planning rules for data center construction.

- Previous projects (such as “Stargate” in Northern Europe) show that OpenAI and Nvidia are already working on similar large-scale AI compute hubs that emphasize renewable energy, sustainability, and scale.

What Investors Should Focus On

Here are angles and potential implications worth tracking:

| Factor | What to Watch / Why It Matters |

|---|---|

| Scale & Location | Where will the data centers be located, and what power / energy availability is there? The electricity cost, grid reliability, and regulatory environment will materially affect operational margin. |

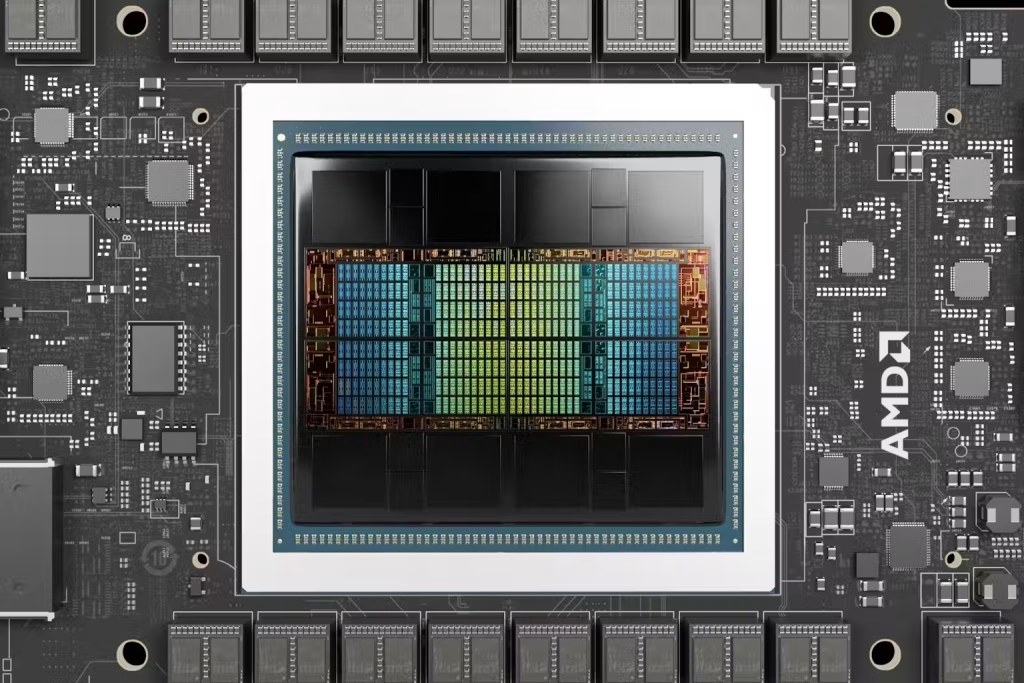

| Hardware Capacity | How many GPUs, what generation, and the timeline for deployment will matter. Newer, more efficient hardware reduces total cost of ownership and improves competitive positioning. |

| Government Support & Regulation | Incentives, permitting, energy policy (cost, carbon emissions, renewables), and local regulation will influence how fast and cost-effectively these data centers can be built. |

| Revenue Models & Partnerships | Are these data centers being built for OpenAI/Nvidia internal needs (compute & AI training), or will they serve third-party clients (cloud, enterprise, public sector)? The mix affects margin, utilization, and risk. |

| Sovereign Control Concerns | “Sovereign AI infrastructure” is increasingly appealing to governments. Companies able to offer assurances over data privacy, sovereignty, uptime, and regulation may win premium contracts. |

| Energy & Sustainability | Data centers are energy-intensive. If the build can leverage renewable supply, efficient cooling, favorable energy tariffs, carbon-neutral or low emissions operations, it could enhance long-term viability. |

| Competition & Supply Chain Risk | Building large compute facilities depends on supply of GPUs, semiconductors, people, power hardware, etc. Bottlenecks in any of those could slow deliveries or raise costs. Also, local competition (other hyperscalers or cloud providers) may increase. |

Risks & Challenges

- Planning, permitting, and securing a reliable energy supply are often the biggest hurdles for large data center projects of this type. Delays and cost overruns are common.

- High upfront capex, long payback periods: these projects must be carefully underwritten, especially in terms of power costs, maintenance, and cooling infrastructure.

- Regulatory headwinds or local opposition (for example around land, electricity consumption, environmental impact) may slow or complicate builds.

- Technology evolution risk: rapid advances in hardware (e.g. newer GPU architectures, better cooling, more efficient chips) could make earlier investments less efficient over time unless they’re future-proofed.
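
To make the capex and power-cost points above concrete, here is a minimal back-of-the-envelope sketch of how electricity prices feed into a simple, undiscounted payback period. Every figure in it (IT load, PUE, power price, revenue, capex, other operating costs) is a hypothetical placeholder chosen for illustration and is not drawn from the announced deal. The function names are likewise illustrative, and a real underwriting model would also account for discounting, utilization ramp-up, and hardware refresh cycles.

```python
HOURS_PER_YEAR = 8760  # 24 hours x 365 days


def annual_power_cost(it_load_mw, pue, price_per_mwh):
    """Yearly electricity bill for a facility running at constant IT load.

    PUE (power usage effectiveness) scales the IT load up to total
    facility draw, covering cooling and other overhead.
    """
    return it_load_mw * pue * HOURS_PER_YEAR * price_per_mwh


def simple_payback_years(capex, annual_revenue, annual_power, annual_other_opex):
    """Undiscounted payback: upfront capex divided by yearly net cash flow.

    Returns infinity when the facility never covers its operating costs.
    """
    net_cash_flow = annual_revenue - annual_power - annual_other_opex
    if net_cash_flow <= 0:
        return float("inf")
    return capex / net_cash_flow


# Hypothetical inputs, for illustration only.
power_low = annual_power_cost(it_load_mw=100, pue=1.2, price_per_mwh=100)
power_high = annual_power_cost(it_load_mw=100, pue=1.2, price_per_mwh=150)

payback_low = simple_payback_years(
    capex=2_000_000_000,
    annual_revenue=500_000_000,
    annual_power=power_low,
    annual_other_opex=94_880_000,
)
payback_high = simple_payback_years(
    capex=2_000_000_000,
    annual_revenue=500_000_000,
    annual_power=power_high,
    annual_other_opex=94_880_000,
)

print(f"Payback at lower power price:  {payback_low:.1f} years")
print(f"Payback at higher power price: {payback_high:.1f} years")
```

Under these assumed inputs, raising the power price by half lengthens the payback period noticeably, which is why location and energy tariffs sit near the top of the watch list.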

Takeaway

This planned investment is a strong signal that AI infrastructure continues to be a core battleground globally. For the UK, it represents a commitment to hosting more of that infrastructure locally. For investors, it suggests opportunity in:

- Data center real estate and operations

- AI hardware supply chain (GPUs, cooling, energy management)

- Cloud / edge computing providers

- Companies that provide services around AI infrastructure (networking, security, regulatory compliance)

It also means that those who get involved early, whether through direct investment, partnerships, or supplying infrastructure components, may benefit most, provided execution risks are managed well.